Human Error

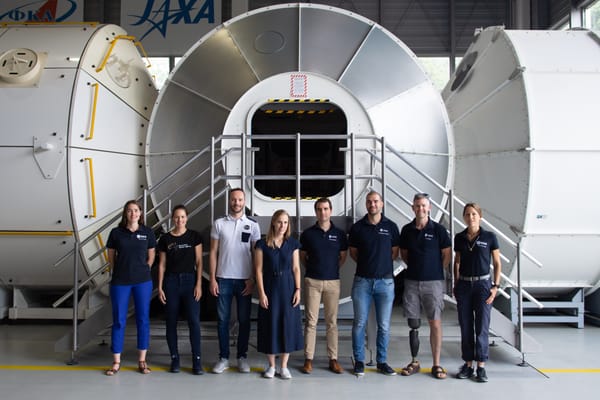

Lesson 3 from ESA Astronaut Training

✨ This post is from the archive 🏛️. Migrated from Substack when we moved our writing to Ghost. ✨

It was time to get into the uncomfortable stuff.

After learning how humans process information (see Day 2), we could look at how humans make errors in that processing, and at the impact those errors have on space operations and on other safety-critical industries such as aviation, oil and gas, rail, and healthcare.

Never mind the impact it has on our everyday life! None of us is perfect.

Understanding human error is NOT about pointing fingers; it's about recognising the deeper systemic issues at play.

🔍 Key takeaways

1. Old vs. New View: The old view sees human error as an (often individual) human problem. The new view sees it as a system problem, a symptom of deeper issues.

2. Reason’s Swiss Cheese Model: Shows how accidents arise when weaknesses in several layers of defence line up, combining latent conditions with active failures.

3. Rasmussen’s Model: Outlines the three levels of cognitive control—skill-based, rule-based, and knowledge-based.

4. Reason’s GEMS Model: Identifies different types of errors—slips, lapses, mistakes, and violations.

5. Cognitive Biases: Biases such as hindsight bias and cognitive fixation can lead to human error.

Why is this crucial? For astronauts, understanding human error means better preparation, safer operations, and the ability to mitigate risks that could lead to mission failure or accidents.

This summary is just a glimpse into a comprehensive lesson designed to enhance safety and efficiency in space missions.